The Karnataka Learning Partnership recently launched a new version of their platform. This post covers why they built it, along with some of its features and details. It is cross-posted from their blog.

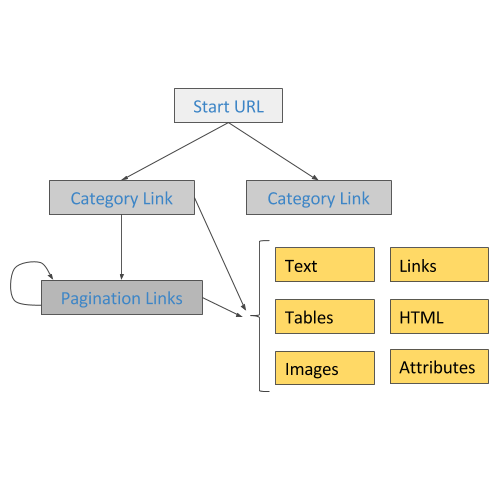

Over the past five months, we have been busy re-architecting our infrastructure at the Karnataka Learning Partnership. Today we are launching the beta version of the website and the API that powers most of it. There are still a few rough edges and incomplete features, but we think it is important to release early and get your feedback. This post accompanies the release and gives an overview of what has changed and why we think the new approach is better.

Data

Writing a web application that reads from multiple databases can be difficult. The trick is to make sure there is the right amount of cohesiveness between them. We are using materialized views in PostgreSQL. A materialized view is a database object that stores the result of a query in an on-disk table structure. Unlike ordinary views, materialized views can be indexed, and because the stored result is read directly instead of re-running the query each time, they offer higher performance and flexibility. The trade-off is that the data is only as current as the last refresh. We bring together data from multiple databases using materialized views and refresh them periodically.
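In PostgreSQL itself this is `CREATE MATERIALIZED VIEW name AS SELECT ...`, later followed by `REFRESH MATERIALIZED VIEW name`. The sketch below uses Python's built-in `sqlite3` module instead so it runs anywhere, which means it is an emulation: SQLite has no materialized views, so the "view" is a snapshot table rebuilt from a query that joins two separately attached databases. The table names, columns and sample rows are hypothetical, not KLP's schema.

```python
import sqlite3

# Query that defines the "view": it joins schools with PIN code data that
# lives in a different (attached) database. Hypothetical schema.
VIEW_QUERY = """
    SELECT s.id AS school_id, s.name AS school_name, p.district AS district
    FROM schools_db.school AS s
    JOIN geo_db.pincode AS p ON p.pincode = s.pincode
"""


def build_demo_connection() -> sqlite3.Connection:
    """Create two separate in-memory databases holding made-up sample rows."""
    # isolation_level=None puts the connection in autocommit mode,
    # so the refresh below can control its own transaction explicitly.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("ATTACH DATABASE ':memory:' AS schools_db")
    conn.execute("ATTACH DATABASE ':memory:' AS geo_db")
    conn.execute("CREATE TABLE schools_db.school (id INTEGER PRIMARY KEY, name TEXT, pincode TEXT)")
    conn.execute("CREATE TABLE geo_db.pincode (pincode TEXT PRIMARY KEY, district TEXT)")
    conn.executemany(
        "INSERT INTO schools_db.school VALUES (?, ?, ?)",
        [(1, "School A", "560001"), (2, "School B", "560034")],
    )
    conn.executemany(
        "INSERT INTO geo_db.pincode VALUES (?, ?)",
        [("560001", "Bangalore Urban"), ("560034", "Bangalore Urban")],
    )
    return conn


def refresh_snapshot(conn: sqlite3.Connection) -> None:
    """Emulate REFRESH MATERIALIZED VIEW: recompute the stored result in one transaction."""
    conn.execute("BEGIN")
    try:
        conn.execute("DROP TABLE IF EXISTS main.mv_school_district")
        conn.execute(f"CREATE TABLE main.mv_school_district AS {VIEW_QUERY}")
        # Like a PostgreSQL materialized view, the stored snapshot can be indexed.
        conn.execute("CREATE INDEX main.idx_mv_district ON mv_school_district (district)")
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise


if __name__ == "__main__":
    conn = build_demo_connection()
    refresh_snapshot(conn)
    # A new source row is invisible to readers of the snapshot until the next refresh.
    conn.execute("INSERT INTO schools_db.school VALUES (3, 'School C', '560001')")
    print(conn.execute("SELECT COUNT(*) FROM mv_school_district").fetchone()[0])
    refresh_snapshot(conn)
    print(conn.execute("SELECT COUNT(*) FROM mv_school_district").fetchone()[0])
```

The demo shows the staleness trade-off directly: the count only changes after the second refresh. In PostgreSQL, reading a separate database from a view would typically go through an extension such as `postgres_fdw` or `dblink`; the post does not say which mechanism KLP uses.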

We have a few new datasets – MP/MLA geographic boundaries, PIN code boundaries and aggregations of various parameters for schools.

API

Our team has a fairly strong Python background, and Python has proven sustainable for us at many levels. Given our team's skill sets and our preference for readable, maintainable code, Django, a popular web development framework for Python, was an obvious choice for our back end.

Design

For our front end, we worked with the awesome folks at Uncommon, who provided us with gorgeous templates. After lengthy discussions and evaluations of various front-end frameworks, we felt that none of them quite suited what we were doing, and that they involved too much overhead. Most front-end frameworks are geared toward single-page apps, and while each of our individual pages has a fair amount of complexity, we did not want to convert everything into one giant single-page app: in our experience, that quickly leads to spiralling complexity, regardless of the framework one uses.

Features

For the most part, this beta release attempts to duplicate what we had in v10.0 of the KLP website. However, there are a few new features, a few old features that have not yet made it over, and a number of features and improvements due in future revisions.

Aside from the API, there are a few important new features worth exploring:

- The compare feature, available at the school and pre-school level, lets you compare any two schools or pre-schools.
  - Planned improvements: the ability to compare at any level of the hierarchy, for example a block to a block or even a block to a district.
- The volunteer feature allows partner organisations to post volunteer opportunities and events at schools and pre-schools. It also allows users to sign up for such events.
  - Planned improvements: richer volunteer and organisation profiles, and social sharing options.
- The search box on the map now searches through school names, hierarchy names (district, block etc.), elected representative constituency names and PIN codes.
  - Planned improvements: neighbourhood and name-based location search.
- An all-new map page powered by our own tile server.
- Our raw data page is now powered by APIs, so the data is always current, unlike our previous version, which served static CSV files.
  - Planned improvements: timestamps on the files and more data sources for download.
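The new search box matches one query against several kinds of place at once. A minimal sketch of that idea follows, assuming an in-memory list of places; the `Place` type, the `kind` labels and the ranking rule (names that start with the query come first) are hypothetical, and a production search would more likely query the database. The one firm fact it relies on is that Indian PIN codes are six digits, so a six-digit query is treated as a PIN code lookup.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Place:
    kind: str  # hypothetical labels: "school", "hierarchy", "constituency", "pincode"
    name: str


PIN_RE = re.compile(r"^\d{6}$")  # Indian PIN codes are six digits


def search(query: str, places: list[Place], limit: int = 10) -> list[Place]:
    """Search school, hierarchy, constituency and PIN code names in one pass."""
    q = query.strip().lower()
    if not q:
        return []
    if PIN_RE.match(q):
        # A six-digit query is treated as an exact PIN code lookup.
        return [p for p in places if p.kind == "pincode" and p.name == q][:limit]
    # Otherwise: case-insensitive substring match across the named places,
    # ranking names that start with the query ahead of other matches.
    hits = [p for p in places if p.kind != "pincode" and q in p.name.lower()]
    hits.sort(key=lambda p: (not p.name.lower().startswith(q), p.name))
    return hits[:limit]


if __name__ == "__main__":
    places = [
        Place("school", "Government Higher Primary School, Jayanagar"),
        Place("hierarchy", "Bangalore South"),
        Place("constituency", "Jayanagar"),
        Place("pincode", "560011"),
    ]
    for p in search("jayanagar", places):
        print(p.kind, "|", p.name)
```

Searching for "jayanagar" returns the constituency before the school, because its name starts with the query; the sample names are illustrative only.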

Now that we have a fairly stable new code base for the KLP website, there are a few features from the old site that we still need to add:

- Assessment data, and visualisations of class, school and hierarchy performance in learning assessments. We have chosen not to add these just yet because we are modifying our assessment analysis and visualisation methodology to make it simpler to understand.
- Detail pages for higher levels of aggregation, such as a cluster, block or district, with information aggregated to that level.
- A refresh of the KLP database to bring it up to date with the current academic year.

None of these three has been done yet, for the same reason: each requires an exhaustive refactor of the existing database to support the new assessment schemas and aggregation and comparison logic.

Aside from the three above, we have a few more features that have been designed and written but did not make it into the current release.

- Like the volunteer workflow, we have a donation workflow that allows partner organisations to post donation requirements on behalf of the schools and pre-schools they work with, covering the things those schools and pre-schools need as well as other in-kind donations. For example, a school might want to set up a computer lab, which requires a number of individual items. Users can choose to donate either the entire lab or individual items, and the partner organisation will help with the logistics of the donation.
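To make the "entire lab or individual items" choice concrete, here is a hypothetical sketch of how a donation requirement could track what is still outstanding. The class, its field names and the rule that a pledge may not exceed the quantity still needed are assumptions for illustration, not KLP's design; the logistics handled by the partner organisation are out of scope.

```python
from dataclasses import dataclass, field


@dataclass
class DonationRequirement:
    """Hypothetical model of a requirement posted by a partner organisation."""
    title: str
    needed: dict[str, int]  # item name -> quantity still needed
    pledges: list[tuple[str, str, int]] = field(default_factory=list)  # (donor, item, qty)

    def donate_item(self, donor: str, item: str, qty: int = 1) -> None:
        """Pledge some quantity of one item; refuse more than is still needed."""
        remaining = self.needed.get(item, 0)
        if qty <= 0 or qty > remaining:
            raise ValueError(f"cannot pledge {qty} x {item!r}; {remaining} still needed")
        self.needed[item] = remaining - qty
        self.pledges.append((donor, item, qty))

    def donate_all(self, donor: str) -> None:
        """Pledge every outstanding item at once, e.g. the entire computer lab."""
        for item, remaining in list(self.needed.items()):
            if remaining > 0:
                self.donate_item(donor, item, remaining)

    @property
    def fulfilled(self) -> bool:
        """True once nothing is left outstanding."""
        return all(qty == 0 for qty in self.needed.values())


if __name__ == "__main__":
    lab = DonationRequirement("Computer lab", {"desktop computer": 10, "UPS": 2, "printer": 1})
    lab.donate_item("Donor A", "printer")  # one donor covers a single item
    lab.donate_all("Donor B")              # another covers everything that remains
    print(lab.fulfilled, lab.pledges)
```

Modelling "donate the entire lab" as pledging every remaining item keeps both choices on a single code path, so the outstanding quantities stay consistent however donors mix the two.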

Our next release is due in mid-October; it will include the volunteer workflow and squish some bugs. After that, we will have a major release in mid-January with the refactored databases, all of the changes they enable, and all the planned improvements listed above. And yes, we do have a mobile application on our minds too.

The DISE application will also be updated with the current year's data by November. By December, we will add the ability to compare any two schools or hierarchies.

So that’s where we are, four years on. The KLP model continues to grow, and we now believe we have a robust base on which to build rapidly and deploy continuously.

For the record, this is version 11. 🙂