Last Friday (12th April), a DataMeet-Up was organised in Sarai-CSDS, New Delhi.

There has been talk for a meeting like this in Delhi for long now. A few hackathons and data-related events have been organised in the past (see this and this). With the recently concluded Open Data Camp in Bangalore and the substantial buzz (at least in Delhi) created by the 12th Plan Hackathon earlier this month, the timing of this DataMeet-Up was quite apt to take a step back, focus on big picture issues, and keep building the open data community in Delhi.

The meet-up began with a round of introductions, and a brief discussion about the DataMeet group and its (Bangalore-centric) history.

This was followed by a discussion of the 12th Plan Hackathon experience. The presence of members of prize winning teams (from the IIT Delhi venue) and representatives of the NDSAP-PMU (NDSAP Project Management Unit) team energised the conversation. The hackathon was found to be a positive experience overall. It especially succeeded to create a set of initiatives to address the difficult task of making planning documents, statistical evidence and proposals for allocation more accessible to (at least some of) the people of the country. The demanding nature of the datasets and documents made available for the hackathon (in terms of the required background understanding of the themes) perhaps led to a number of submissions to engage with issues not directly associated to the 12th Plan.

From the experiences of the hackathon, we quickly moved to talk about ‘what’s next?’. Responding to the demand for a public API to access the datasets hosted at the data portal, Subhransu and Varun from NIC talked about the ongoing efforts to develop the second version of the OGPL software that the data portal uses. Unlike the existing version, the second version will not host uploaded datasets as individual files but as structured/linked data (following RDF specifications). While this was of great interest to some of the participants in the meet-up, it was not immediately clear to everybody why this shift (from separate data files to structured/linked data) is such a big deal. So we spent some time discussing semantic web, linked data and API.

Another thread of ‘what’s next?’ discussion explored official and un-official processes for requesting government agencies to publish specific datasets on the data portal, and also on how (and whether) non-government agencies can share cleaned-up government datasets through the data portal. We talked about approaching Data Controllers for the agencies concerned, endorsing each other’s data requests to drive community-based demands, and also the possibility of an alternative portal (perhaps using OGPL itself) to share governmental datasets cleaned up and reformatted by individuals and organisations. The group also noted and celebrated the initiative from NIC to use GitHub for storing and sharing code and data used in visualisations, data cleaning processes and data-based applications.

The challenge of unclear licensing of data, both in case of bought data products (such as Census of India and Nation Sample Survey) and publicly available datasets, was flagged but was not discussed fully.

Next, we had a round of inputs from the participants on what kind of data they have been collecting and working with, and using what softwares.

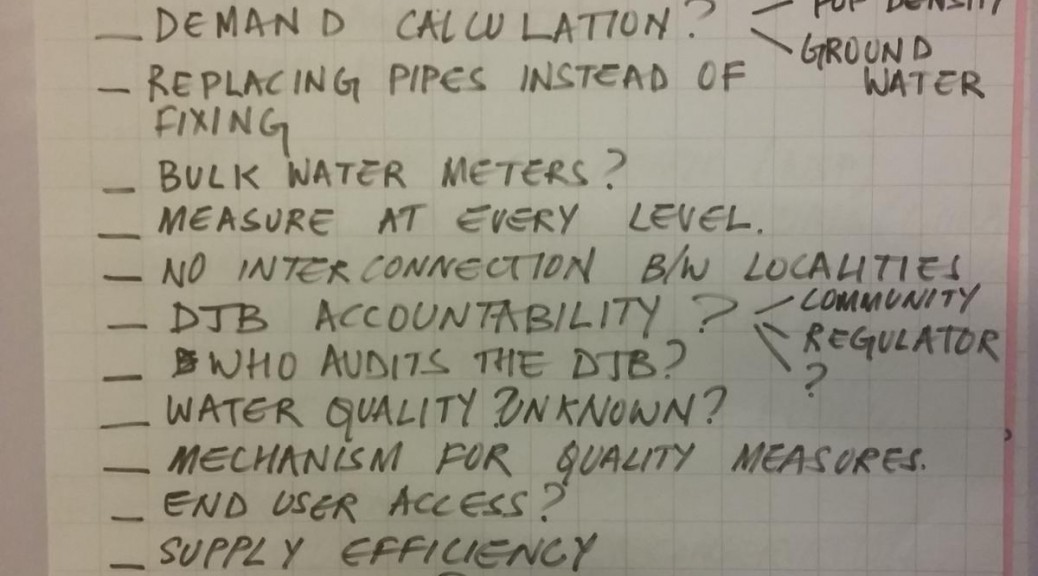

Akvo is working closely with organisation(s) that collect substantial amount of district-level data in certain focus regions, including water infrastructure, quality and usage data. For field level data collection, they use/promote a self-developed Android-based software called FLOW. They mentioned that absence of good quality basemaps (either vector or satellite imagery-based), for the areas they are working in, makes environmental data collection rather difficult. The changed Google Map API terms of use is forcing them to consider other options and move off Google (Satellite) Maps. It was suggested that they should try using imagery from Bhuvan. They also expressed interest in using data from and contributing to the OpenStreetMap project.

Accountability Initiative, located in the Centre for Policy Research in New Delhi, collects, digitises, cleans up, uses, analyses and archives substantial volume of national and state budget data and utilisation reports. They, however, cannot share the (digitised and cleaned up) data publicly due to ambiguous and missing licence agreements. They are producing large volume of data analysis but relatively lesser amount of visualisations. They mostly use Microsoft Excel and Stata for their data operations. Picking up the thread from Vibhu (of Accountability Initiative), Subhransu (of NIC) talked about the data cleaning challenges NIC is facing while working with various government agencies to open up their respective datasets.

Ravi and Pratap talked about the data usage situation in the journalism world. They mentioned that most journalists prefer accessing government data in hard (printed) copies, as that is seen as a permanent, easily archivable, and easily accessible (without knowing programming and data-wrangling skills) format. RTI remains one of the backbones of investigative journalism, and almost the entire volume of government data obtained through RTI gets stored in printed format (all over the journalists’ offices). The barrier of programming skills is the most important factor keeping Indian journalists away from more explorative and in-depth usage of government data.

In his quick update on the students’ scene in Delhi, Parin told us that there is little excitement around data analysis, management and visualisation. The group found this troubling. In a later discussion, maybe we can talk about it more and develop plans for engaging students to work with government (and non-government) data.

We briefly discussed GapMinder and Ushahidi, and data visualisation work by the teams at the New York Times and the Guardian. These examples are well-known but how to recreate them (in a different context) is often not very clear.

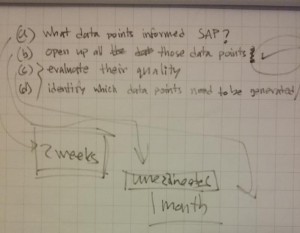

At the end we went back to a question regarding the quality of data published in the data.gov.in portal that was raised earlier in the community interaction session of the NDSAP workshop held on 4th April 2013. We were informed by Subhransu and Varun that the data shared on the portal goes through a three-stage quality-checking procedure — (1) first, the data to be shared is put together and rechecked by the Data Creators (who are headed by a Data Controller) in the government agency concerned, (2) the Data Controller of the agency undertakes the second stage of quality checking, and (3) finally the data is shared with the NDSAP-PMU team at NIC, who rechecks the data before approving its uploading to the portal. If required, the NIC team asks the agency to share the raw data for comparing with the (formatted) shared data. Vibhu raised a crucial question about how dependable and representative are such ‘raw data’ collected by the central government agencies.

As the questions were getting tougher and the evening older, we concluded the meeting. The next meeting will be sometime in mid-May. exact date and venue is to be decided.

Participants:

Guneet Narula, Sputznik

Amitangshu Acharya , Akvo

Isha Parihar, Akvo

Ravi Bajpai, Indian Express

Vibhu Tewary, Accountability Initiative

Pratap Vikram Singh, Governance Now

Shashank Srinivasan, Independent

Subhransu, NIC

Varun, NIC

Parin Sharma, Independent

Sumandro Chattapadhyay, Sarai-CSDS