(Suvajit is a member of DataMeet’s Transportation working group. Along with Srinivas Kodali, we are working on how to make more transit-related data available.)

Mobility is one of the fundamental needs of humanity, and mobility with a shared mode of transport is undoubtedly the best on all counts: socially, economically and environmentally. The key to an effective shared mode of transport (termed Public Transport) is “Information”. In Indian cities, lack of information has been cited as the primary deterrent to using Public Transport.

Transport Agencies are commissioning Intelligent Transport Systems (ITS) in various modes and capacities to improve their systems and to meet new transport challenges. Vehicle Tracking Systems, Electronic Ticketing Machines, and Planning & Scheduling software are all engines of data creation. On the other side, the advent of smart mobile devices in everyone’s hands is bringing new opportunities to make people much more information reliant.

But the demand for transit data is remarkably low. Transit users, and even transit data users like City Planners, should demand it.

The demand for Public Transport data in India should cover the following aspects:

A. Availability

To make the operational and infrastructure data of Transport Operators easily available to passengers as well-organised information, so that they can plan their trips using the available modes of Public Transport.

B. Interoperability

To make transit data provided by multiple agencies for different modes (bus, metro, rail) usable together, so that multimodal trip planning becomes possible.

C. Usability

To publish transit-oriented data in a standard exchange format across agencies at regular intervals, providing comprehensive, accurate and up-to-date data for study, research, analysis, planning and system development.

D. Standardisation

To make publishing data in a standard format and at a standard frequency part of the Passenger Charter of Transport Operators. This can also serve as a guideline for Transport Operators when commissioning any system such as a Vehicle Tracking System, ITS, Passenger Information System or website.

What kind of Transit data is needed?

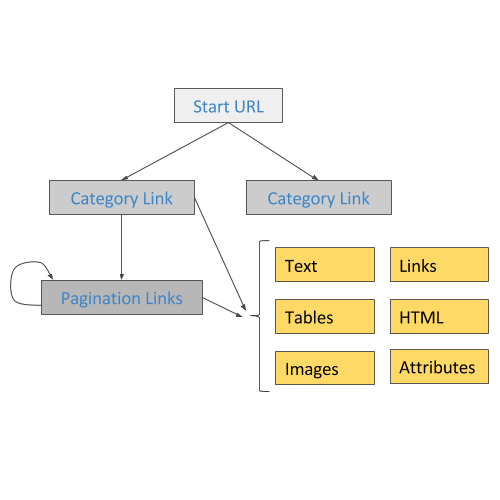

The basic service dataset comprises data on bus stops, stations, routes, geographic alignment, timetables and fare charts. With this dataset, general information on the transit service can be easily gathered to plan a journey. Trip planning mobile apps, portals and similar services can consume this data to provide ready and usable information for commuters.
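As an illustration of how a trip planning app might consume such a dataset, here is a minimal Python sketch. The record layout, field names and sample values are all hypothetical and do not follow any particular agency's format; a real feed would also carry route alignment and fares.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Stop:
    """A bus stop or station (hypothetical fields)."""
    stop_id: str
    name: str
    lat: float
    lon: float


@dataclass(frozen=True)
class Departure:
    """One timetable entry: a route leaving a stop at a scheduled time."""
    route_id: str
    stop_id: str
    departs_at: time


def next_departure(timetable, stop_id, now):
    """Return the earliest departure from stop_id at or after now, or None."""
    upcoming = [
        d for d in timetable
        if d.stop_id == stop_id and d.departs_at >= now
    ]
    return min(upcoming, key=lambda d: d.departs_at, default=None)


# Made-up sample data, for illustration only.
STOPS = {
    "S1": Stop("S1", "Central Bus Stand", 12.9770, 77.5720),
    "S2": Stop("S2", "Market Road", 12.9650, 77.5870),
}

TIMETABLE = [
    Departure("R1", "S1", time(8, 0)),
    Departure("R1", "S1", time(8, 30)),
    Departure("R2", "S1", time(8, 15)),
    Departure("R2", "S2", time(8, 5)),
]
```

Calling `next_departure(TIMETABLE, "S1", time(8, 10))` picks the 8:15 departure on route `R2`, which is the kind of lookup a journey planner repeats for every stop along a trip.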

A commuter who depends on public transport is driven by a lot of anxieties. Some common queries are: “When will the bus arrive?”, “Where is my bus now?”, “Will I get a seat on the bus?”, “I hope the bus has not been diverted and skipped my stop.”

All these queries can be answered with real-time data such as Estimated Time of Arrival (ETA), position of the vehicle, occupancy level, and alert and diversion messages. Transport Operators equipped with tracking systems should be able to provide this data.
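To make the link between vehicle position and ETA concrete, here is a rough Python sketch. It assumes straight-line (great-circle) distance and a constant average speed, both simplifications introduced here for illustration; an operator's tracking system would normally use the route alignment and observed traffic instead. The function names and parameters are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def eta_minutes(vehicle_lat, vehicle_lon, stop_lat, stop_lon, avg_speed_kmph):
    """Crude ETA: straight-line distance divided by an assumed average speed."""
    if avg_speed_kmph <= 0:
        raise ValueError("avg_speed_kmph must be positive")
    distance_km = haversine_km(vehicle_lat, vehicle_lon, stop_lat, stop_lon)
    return distance_km / avg_speed_kmph * 60
```

Because the straight-line distance is never longer than the road distance, this estimate tends to be optimistic, which is one reason published real-time feeds are more useful to commuters than raw positions alone.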

- Operational & Statistical Data

A Transport Operator’s operational data comprises ticket sales and data on operational infrastructure and resources such as depots, buses, crew and workshops. As operators are moving towards managing this data digitally, publishing it at regular intervals becomes a practical option.

A general commuter might not be interested in this data, but it will be very useful for City Planners to analyse commuting trends in the city and make informed decisions. City transport infrastructure can then be planned around transit needs and demands.

The transport agency can benefit greatly by demonstrating accountability and transparency. It can lift its image as a committed service provider, thereby gaining more passengers for its service.

Together, this will make for a thriving landscape: if the data creators of Public Transport in India publish their data openly, a larger set of people can use it to build platforms, applications and solutions for transport study, analysis and planning across different sections of users.

Open Transit Data is the tipping point for Smart Mobility in India.

That is why we have started putting our thoughts together and have begun writing an Open Transport Data Manifesto.